- DOWNLOAD SPARK 2.10. BIN HADOOP2.7 TGZ LINE COMMAND INSTALL

- DOWNLOAD SPARK 2.10. BIN HADOOP2.7 TGZ LINE COMMAND CODE

- DOWNLOAD SPARK 2.10. BIN HADOOP2.7 TGZ LINE COMMAND DOWNLOAD

- DOWNLOAD SPARK 2.10. BIN HADOOP2.7 TGZ LINE COMMAND WINDOWS

The “election” of the primary master is handled by Zookeeper. We’ll go through a standard configuration which allows the elected Master to spread its jobs on Worker nodes.

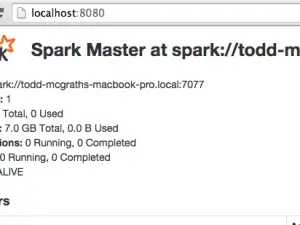

This topic will help you install Apache Spark on your AWS EC2 cluster. Add the dependencies needed to connect Spark and Cassandra. Connect via SSH to every node except the node named Zookeeper.

You can ignore any warning messages you get. The Spark configuration lets us look into the details of our Spark session, which is very useful when we are using a remote host. Now that we are done with the setup of SparkR, let’s run a quick example to check that it is working properly: create a local data frame and then convert it into a Spark data frame. Next, check the Spark UI by opening its page in a web browser; as you can see, a Spark job is listed as completed under the tasks. So, by following these simple steps, we can set up SparkR and get going quickly. In my next tutorial, you can get familiar with the basic functionality of the SparkR package. I am providing the R code for this process in the comments section; if you have any questions, suggestions, or criticism, kindly drop a comment.
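The quick check described here (a local data frame converted into a Spark data frame) might look like the following sketch. It assumes `SPARK_HOME` already points at the extracted Spark folder and uses the Spark 2.x call `sparkR.session()`; the column names and the app name are made up for illustration and are not taken from the original figures.

```r
# Assumption: SPARK_HOME is already set to the extracted Spark folder.
library(SparkR, lib.loc = file.path(Sys.getenv("SPARK_HOME"), "R", "lib"))

# Start (or reuse) a local Spark session; the app name is hypothetical.
sparkR.session(master = "local[*]", appName = "SparkR-check")

# A small local R data frame with made-up columns.
local_df <- data.frame(id = 1:3,
                       label = c("a", "b", "c"),
                       stringsAsFactors = FALSE)

# Convert the local data frame into a Spark data frame.
spark_df <- createDataFrame(local_df)

# Inspect the result: the schema, then the first rows pulled back into R.
printSchema(spark_df)
print(head(spark_df))
```

If the session starts and the rows print, the setup works; the job triggered by `head()` should then also be visible in the Spark UI.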

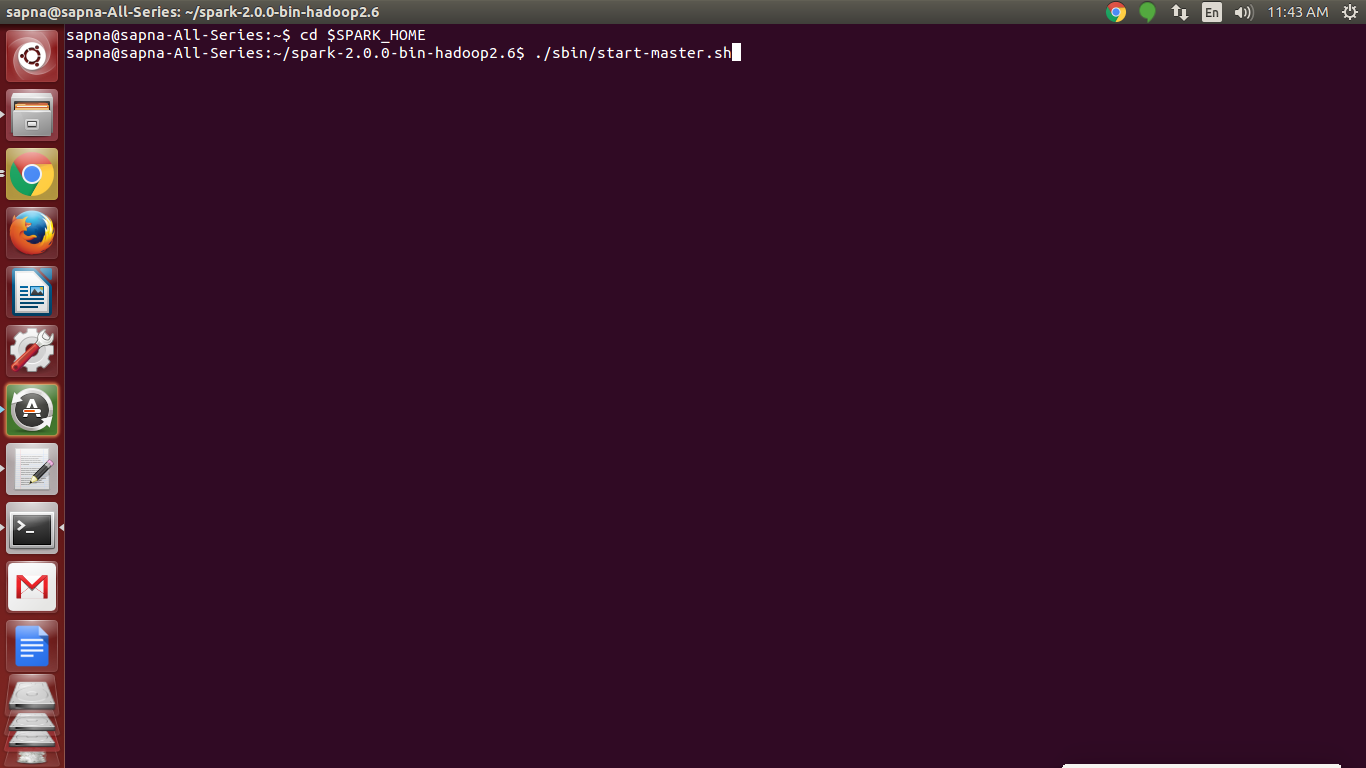

The second step is setting the path to the SparkR package library. Now we have the SparkR package in our library and can use it like any other package in R. In the next step, we create a Spark context and a SQL context for the local machine. This is how the console will look when you run these commands.
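As a rough sketch of these two steps, the snippet below adds the SparkR library folder to R's library path and starts a local session. In Spark 2.x, `sparkR.session()` is the single entry point that takes the place of a separate Spark context and SQL context (the older `sparkR.init()` and `sparkRSQL.init()` calls are deprecated there). Which calls the original figure used is not known, and the fallback folder and app name are assumptions.

```r
# Assumption: fall back to the tutorial's folder if SPARK_HOME is not set yet.
if (Sys.getenv("SPARK_HOME") == "") {
  Sys.setenv(SPARK_HOME = "C:/spark-2.0.1")
}

# The SparkR package ships inside the Spark download, under SPARK_HOME/R/lib.
spark_lib <- file.path(Sys.getenv("SPARK_HOME"), "R", "lib")

# Put that folder first on the library path so library(SparkR) can find it.
.libPaths(c(spark_lib, .libPaths()))
library(SparkR)

# Start a Spark session on the local machine, using all available cores.
# The app name is a hypothetical label that shows up in the Spark UI.
sparkR.session(master = "local[*]", appName = "SparkR-setup")
```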

If everything is working fine, you will see a message that reads “Welcome to Spark”. Now we can check the Spark UI by opening its page in a web browser.

Running SparkR using RStudio

I personally prefer this method because we don’t have to switch between the Command Prompt and RStudio every time we work with Spark and R. We can save a few R scripts and keep some production-ready code around for development and analysis. The few steps below will help users set up SparkR very quickly in RStudio. The very first step is setting up the system environment. Use the code shown in the figure to set up the environment, changing the location to match your directory.
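Since the figure's code is not reproduced in the text, here is a minimal sketch of what setting up the environment could look like. It assumes Spark was extracted to `C:/spark-2.0.1` (the folder used elsewhere in this tutorial); the variable name `spark_home` is only illustrative.

```r
# Assumed install location (the folder used in this tutorial);
# change it to wherever you extracted Spark.
spark_home <- "C:/spark-2.0.1"

# SparkR uses the SPARK_HOME environment variable to locate Spark.
Sys.setenv(SPARK_HOME = spark_home)

# Warn early if the folder is missing, since later steps would fail.
if (!dir.exists(spark_home)) {
  warning("SPARK_HOME does not exist: ", spark_home)
}

# Show the value that is now set for this R session.
print(Sys.getenv("SPARK_HOME"))
```

Setting the variable with `Sys.setenv()` only affects the current R session, so it needs to run at the top of each script or be placed in a startup file.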

On the download page, click on Spark-2.0.1-bin-hadoop2.7.tgz, which is found next to step 4. Once you have downloaded the Spark-2.0.1-bin-hadoop2.7.tgz file, create a new folder on your local drive (C:/) and extract the archive into it. Once you extract it, you will have these items in the folder: “bin”, “conf”, “data”, “examples”, “jars”, “licenses”, “python”, “R”, “sbin”, “yarn”, “LICENSE”, “NOTICE”, “README.md”, “RELEASE”.

After copying the contents onto your local drive, it’s time to run SparkR from the Command Prompt. To start the command shell, open the Start menu, search for “CMD”, then right-click “Command Prompt” and choose “Run as administrator”. Once you are inside the Command Prompt, navigate to the directory where you installed Spark. For that, you can use the command “cd C:\spark-2.0.1” (in my case Spark is in the folder “spark-2.0.1”). Once you are in the Spark directory, move to the bin directory with the command “cd bin”. Once you are in the bin directory (“C:\spark-2.0.1\bin”), enter the command “sparkr” to run the SparkR shell. After you type this command, press Enter and wait 10-15 seconds; you will see some logs on your screen.

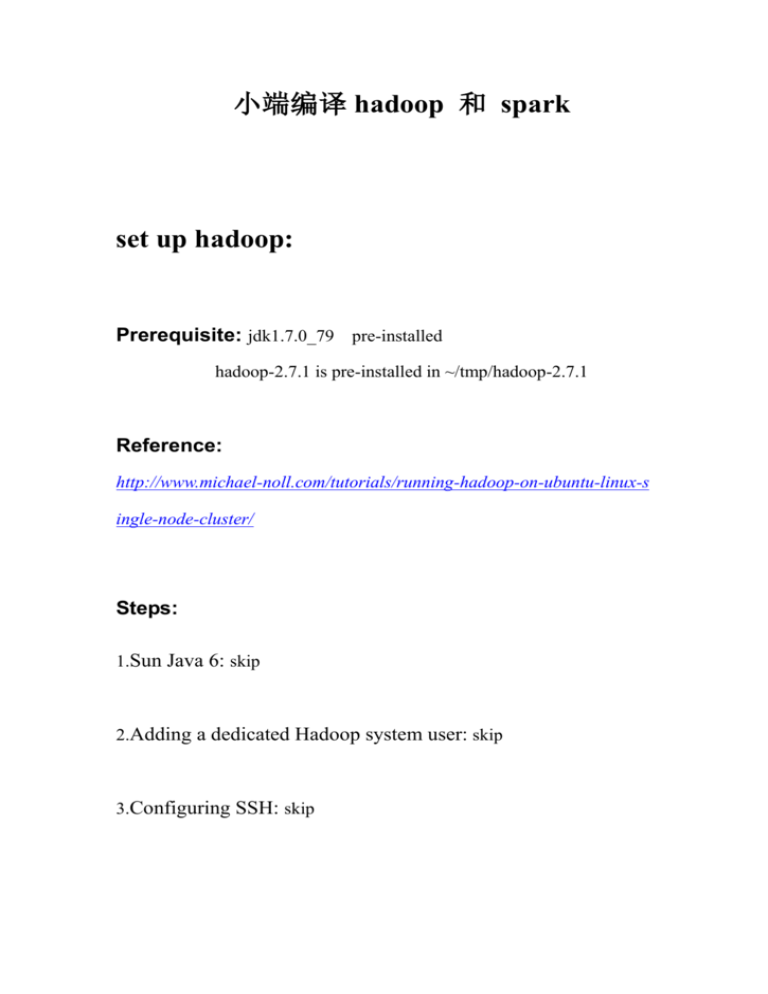

The steps listed here will help you get going with SparkR quickly. SparkR is an R package that provides a front end for using Apache Spark from R. As a prerequisite, you need the latest version of Java installed, with the system environment variables set.

To download Apache Spark, use your web browser to open the download page on the official Apache Spark website. You have to follow a few steps to create a download link for the Spark package of your choice. Under “Choose a Spark release”, select the latest release, which at the time of writing is 2.0.1 (Oct 03 2016). Under “2. Choose a package type”, select the pre-built package for Hadoop 2.7 and later from the drop-down list. Set “Choose a download type” to Direct Download.

This tutorial provides a step-by-step guide to help users set up and get going with Apache Spark and R on a Windows platform, using RStudio and the Command Prompt.
